Implementing Contextual Bandits: Complete Algorithm Guide

Part 3 of 5: From Theory to Code

TL;DR: This post provides complete, production-ready implementations of the essential contextual bandit algorithms: ε-greedy (baseline), UCB/LinUCB (confidence-based), and Thompson Sampling (Bayesian). Includes algorithm selection guide with default hyperparameters, when to use each method, and practical tuning strategies.

Reading time: ~25 minutes

Introduction: From Theory to Practice

Parts 1 and 2 covered when to use contextual bandits and the mathematical foundations. Now it’s time to implement.

This post provides complete, working code for the core algorithms you’ll actually deploy:

- ε-greedy: Simplest baseline (start here)

- UCB/LinUCB: Confidence-based exploration (production workhorse)

- Thompson Sampling: Bayesian posterior sampling (often best empirically)

Each algorithm includes:

- Full Python implementation

- When to use it

- Hyperparameter defaults and tuning

- Strengths and limitations

By the end, you’ll know which algorithm fits your problem and have code ready to run.

Algorithm Selection: Quick Start Guide

Before diving into implementations, use this table to pick your starting point:

| Your Situation | Recommended Algorithm | Default Hyperparameters | Why This Works |

|---|---|---|---|

| Few actions (K < 20), no context | ε-greedy or UCB1 | ε = 0.1 with decay 1/t | Simple, interpretable, proven |

| Few actions, simple context (d < 50) | LinUCB | α = 1.0, λ = 1.0 | Efficient, confidence-based exploration |

| Medium context (d = 50-500), linear | LinUCB or Linear Thompson | α = 1.0, λ = 1.0, σ = 1.0 | Balance of efficiency and exploration |

| High-dim context (d > 500), linear | LinUCB with feature selection | α = 0.5, λ = 10.0 | Regularization prevents overfitting |

| Non-stationary environment | ε-greedy (constant) or Discounted TS | ε = 0.15, γ = 0.9999 | Continuous exploration adapts to drift |

| Need interpretability | LinUCB | α = 1.0, λ = 1.0 | Can examine learned weights |

| Maximum performance | Thompson Sampling | Use defaults from algorithm | Best empirical results |

Start simple: Begin with ε-greedy to validate your setup. Upgrade to LinUCB or Thompson Sampling once you’ve confirmed the basics work.

ε-greedy: The Simplest Baseline

ε-greedy is the go-to baseline. It’s easy to implement, easy to explain to stakeholders, and works reasonably well.

Algorithm

At each round t:

1. With probability ε: Choose random action (explore)

2. With probability 1-ε: Choose action with highest estimated reward (exploit)

3. Observe reward, update estimates

Mathematical Formulation

\arg\max_{a'} \hat{\mu}_{a'}(x) & \text{with probability } 1-\varepsilon \\ \text{uniform}(A) & \text{with probability } \varepsilon \end{cases}$$ ### Complete Implementation ```python import numpy as np class EpsilonGreedy: """ ε-greedy bandit for multi-armed bandit (no context). Simple baseline: explores randomly with probability ε, exploits best action with probability 1-ε. """ def __init__(self, n_actions, epsilon=0.1): """ Args: n_actions: Number of available actions epsilon: Exploration probability (0 = pure exploit, 1 = pure explore) """ self.n_actions = n_actions self.epsilon = epsilon # Track statistics for each action self.counts = np.zeros(n_actions) # Number of times each action tried self.values = np.zeros(n_actions) # Estimated mean reward for each action def select_action(self, context=None): """ Select action using ε-greedy strategy. Args: context: Ignored for basic ε-greedy (non-contextual) Returns: action: Index of chosen action (0 to n_actions-1) """ if np.random.random() < self.epsilon: # Explore: choose random action return np.random.randint(self.n_actions) else: # Exploit: choose action with highest estimated value return np.argmax(self.values) def update(self, action, reward): """ Update estimates based on observed reward. Args: action: Action that was taken reward: Observed reward """ self.counts[action] += 1 n = self.counts[action] # Incremental mean update: μ_new = μ_old + (reward - μ_old) / n self.values[action] += (reward - self.values[action]) / n def get_state(self): """Return current state for logging/debugging.""" return { 'counts': self.counts.copy(), 'values': self.values.copy(), 'epsilon': self.epsilon } # Example usage if __name__ == "__main__": # Simulate 3 actions with different true rewards true_rewards = [0.3, 0.5, 0.7] # Action 2 is best n_rounds = 1000 bandit = EpsilonGreedy(n_actions=3, epsilon=0.1) total_reward = 0 for t in range(n_rounds): # Select action action = bandit.select_action() # Simulate reward (Bernoulli with true probability) reward = 1 if np.random.random() < true_rewards[action] else 0 # Update bandit bandit.update(action, reward) total_reward += reward print(f"Total reward: {total_reward}/{n_rounds}") print(f"Average reward: {total_reward/n_rounds:.3f}") print(f"Optimal average: {max(true_rewards):.3f}") print(f"\nLearned values: {bandit.values}") print(f"Action counts: {bandit.counts}") ``` ### Decaying Exploration Fixed ε wastes exploration forever. Better to decay over time: ```python class DecayingEpsilonGreedy(EpsilonGreedy): """ ε-greedy with decaying exploration rate. Starts with high exploration, gradually increases exploitation. """ def __init__(self, n_actions, epsilon_0=1.0, decay_rate=0.01, min_epsilon=0.01): """ Args: epsilon_0: Initial exploration probability decay_rate: How fast to decay (higher = faster decay) min_epsilon: Minimum exploration probability """ super().__init__(n_actions, epsilon=epsilon_0) self.epsilon_0 = epsilon_0 self.decay_rate = decay_rate self.min_epsilon = min_epsilon self.t = 0 def select_action(self, context=None): """Select action with decaying ε.""" # Update epsilon: ε(t) = max(min_ε, ε₀ / (1 + decay_rate * t)) self.t += 1 self.epsilon = max( self.min_epsilon, self.epsilon_0 / (1 + self.decay_rate * self.t) ) return super().select_action(context) ``` ### When to Use ε-greedy **✅ Use when:** - You need a simple, interpretable baseline - Explaining the algorithm to stakeholders - Testing your infrastructure before deploying complex methods - Environment is non-stationary (constant ε helps track drift) - Many actions (simpler than maintaining confidence bounds for thousands of actions) **❌ Limitations:** - Explores uniformly (wastes trials on clearly bad actions) - Suboptimal regret: O(T^(2/3)) vs O(√T) for UCB/Thompson - Requires tuning ε (too high wastes traffic, too low risks convergence) ### Hyperparameter Tuning | Parameter | Typical Range | Too Low → Problem | Too High → Problem | |-----------|---------------|-------------------|-------------------| | **ε (exploration)** | 0.05 - 0.2 | Premature convergence | Wasted traffic on bad actions | | **decay_rate** | 0.001 - 0.1 | Too slow adaptation | Stops exploring too quickly | | **min_epsilon** | 0.01 - 0.05 | Eventually stops learning | Never fully exploits | **Rule of thumb:** - **Stationary environment:** Start ε = 0.1, decay to min = 0.01 - **Non-stationary:** Use constant ε = 0.15 (no decay) - **Quick testing:** ε = 0.2 (more exploration, faster learning signal) --- ## UCB: Confidence-Based Exploration UCB (Upper Confidence Bound) improves on ε-greedy by directing exploration toward **uncertain** actions, not random ones. ### Algorithm (UCB1 for MAB) ```python At each round t: 1. Compute UCB for each action: UCB(a) = μ̂_a + sqrt(2 log t / n_a) 2. Choose action with highest UCB 3. Observe reward, update estimates ``` ### Implementation ```python class UCB1: """ UCB1 algorithm for multi-armed bandits. Uses optimism under uncertainty: adds confidence bonus to each action's estimate, naturally balancing exploration and exploitation. """ def __init__(self, n_actions): """ Args: n_actions: Number of available actions """ self.n_actions = n_actions self.counts = np.zeros(n_actions) self.values = np.zeros(n_actions) self.t = 0 # Total rounds def select_action(self, context=None): """ Select action using UCB strategy. Returns: action: Index of action with highest UCB """ self.t += 1 # Phase 1: Try each action once (initialization) for a in range(self.n_actions): if self.counts[a] == 0: return a # Phase 2: Compute UCB for each action ucb_values = np.zeros(self.n_actions) for a in range(self.n_actions): # UCB = estimate + confidence bonus confidence_bonus = np.sqrt(2 * np.log(self.t) / self.counts[a]) ucb_values[a] = self.values[a] + confidence_bonus return np.argmax(ucb_values) def update(self, action, reward): """Update estimates based on observed reward.""" self.counts[action] += 1 n = self.counts[action] self.values[action] += (reward - self.values[action]) / n def get_ucb_values(self): """Return current UCB values for debugging.""" if self.t == 0: return np.zeros(self.n_actions) ucb_values = np.zeros(self.n_actions) for a in range(self.n_actions): if self.counts[a] > 0: confidence_bonus = np.sqrt(2 * np.log(self.t) / self.counts[a]) ucb_values[a] = self.values[a] + confidence_bonus return ucb_values ``` ### Visual Intuition Remember from Part 2: UCB gives higher bonuses to under-explored actions. ```mermaid %%{init: { 'theme':'base', 'themeVariables': { 'primaryColor':'#0b1220', 'primaryTextColor':'#e5e7eb', 'primaryBorderColor':'#10b981', 'lineColor':'#06b6d4', 'fontSize':'14px', 'fontFamily':'monospace' } }}%% graph LR subgraph Action_A[" "] A_Est[Estimate: 0.5<br/>Trials: 100<br/>Bonus: +0.1] A_UCB[UCB: 0.6<br/>Well-explored] end subgraph Action_B[" "] B_Est[Estimate: 0.4<br/>Trials: 10<br/>Bonus: +0.3] B_UCB[UCB: 0.7<br/>⚡ Selected] end A_Est --> A_UCB B_Est --> B_UCB B_UCB -.chosen.-> Winner[Action B wins<br/>despite lower estimate] style Action_A fill:none,stroke:none style Action_B fill:none,stroke:none style A_Est fill:#334155,stroke:#10b981,color:#d1fae5,stroke-width:2px style A_UCB fill:#1e293b,stroke:#10b981,color:#d1fae5,stroke-width:2px style B_Est fill:#334155,stroke:#f59e0b,color:#fde68a,stroke-width:2px style B_UCB fill:#1e293b,stroke:#f59e0b,color:#fde68a,stroke-width:2.5px style Winner fill:#1e293b,stroke:#06b6d4,color:#cffafe,stroke-width:2px linkStyle 2 stroke:#06b6d4,stroke-width:2px,stroke-dasharray:5 ``` ### When to Use UCB1 **✅ Use when:** - Stochastic rewards (i.i.d. from fixed distributions) - Moderate number of actions (K = 2-100) - Want theoretical guarantees (provable regret bounds) - No context available (pure MAB problem) **❌ Limitations:** - No context (doesn't use side information) - Assumes stationarity (struggles when distributions drift) - Can over-explore compared to Thompson Sampling --- ## LinUCB: UCB for Contextual Bandits LinUCB extends UCB to contextual bandits with linear reward models. This is the **production workhorse** for most applications. ### Algorithm ```python At each round t: 1. Observe context x_t 2. For each action a: - Estimate θ̂_a via ridge regression - Compute confidence radius: σ_a(x) = α · sqrt(x^T A_a^-1 x) - Compute UCB: UCB_a(x) = θ̂_a^T x + σ_a(x) 3. Choose a_t = argmax_a UCB_a(x_t) 4. Observe reward, update estimates ``` ### Complete Implementation ```python class LinUCB: """ Linear Upper Confidence Bound algorithm. Assumes linear reward model: r(x,a) = θ_a^T x + ε Uses ridge regression to estimate parameters and confidence ellipsoids to guide exploration. """ def __init__(self, n_actions, n_features, alpha=1.0, lambda_=1.0): """ Args: n_actions: Number of available actions n_features: Dimensionality of context features alpha: Exploration parameter (higher = more exploration) lambda_: Regularization parameter (higher = more regularization) """ self.n_actions = n_actions self.n_features = n_features self.alpha = alpha self.lambda_ = lambda_ # Initialize for each action # A_a = X_a^T X_a + λI (regularized Gram matrix) # b_a = X_a^T r_a (feature-reward products) self.A = [lambda_ * np.eye(n_features) for _ in range(n_actions)] self.b = [np.zeros(n_features) for _ in range(n_actions)] def select_action(self, context): """ Select action with highest UCB. Args: context: Feature vector (array of shape [n_features]) Returns: action: Index of chosen action """ context = np.array(context).reshape(-1, 1) # Column vector ucb_values = np.zeros(self.n_actions) for a in range(self.n_actions): # Compute parameter estimate: θ̂_a = A_a^-1 b_a A_inv = np.linalg.inv(self.A[a]) theta = A_inv @ self.b[a] # Predicted reward predicted_reward = theta.T @ context # Confidence bonus: α * sqrt(x^T A_a^-1 x) confidence_bonus = self.alpha * np.sqrt( context.T @ A_inv @ context ) # UCB = predicted reward + confidence bonus ucb_values[a] = predicted_reward + confidence_bonus return np.argmax(ucb_values) def update(self, action, context, reward): """ Update estimates for chosen action. Args: action: Action that was taken context: Feature vector that was observed reward: Reward that was received """ context = np.array(context).reshape(-1, 1) # Update A_a = A_a + x x^T self.A[action] += context @ context.T # Update b_a = b_a + r x self.b[action] += reward * context.squeeze() def get_theta(self, action): """Get learned parameter vector for an action.""" A_inv = np.linalg.inv(self.A[action]) return A_inv @ self.b[action] def get_confidence_radius(self, action, context): """Get confidence radius for action in given context.""" context = np.array(context).reshape(-1, 1) A_inv = np.linalg.inv(self.A[action]) return self.alpha * np.sqrt(context.T @ A_inv @ context)[0, 0] # Example usage if __name__ == "__main__": # Simulate contextual bandit with 3 actions, 5 features n_actions = 3 n_features = 5 n_rounds = 1000 # True parameters (unknown to algorithm) true_theta = [ np.array([0.1, 0.2, -0.1, 0.3, 0.1]), # Action 0 np.array([0.3, 0.1, 0.2, -0.1, 0.2]), # Action 1 np.array([0.2, 0.3, 0.1, 0.2, 0.3]), # Action 2 ] bandit = LinUCB(n_actions, n_features, alpha=1.0, lambda_=1.0) total_reward = 0 for t in range(n_rounds): # Generate random context context = np.random.randn(n_features) # Select action action = bandit.select_action(context) # Simulate reward: r = θ_a^T x + noise true_reward = true_theta[action] @ context noise = np.random.normal(0, 0.1) reward = true_reward + noise # Update bandit bandit.update(action, context, reward) total_reward += reward print(f"Average reward: {total_reward/n_rounds:.3f}") print("\nLearned parameters vs True parameters:") for a in range(n_actions): learned = bandit.get_theta(a) print(f"Action {a}:") print(f" Learned: {learned}") print(f" True: {true_theta[a]}") print(f" Error: {np.linalg.norm(learned - true_theta[a]):.3f}") ``` ### When to Use LinUCB **✅ Use when:** - Have context features (user, item, situation) - Rewards are approximately linear in features - Need interpretable model (can examine learned θ weights) - Context dimensionality is moderate (d = 10-1000) - Want provable regret guarantees: O(d√(T log T)) **❌ Limitations:** - Assumes linear reward structure (misspecification if nonlinear) - Computational cost grows with d² (matrix inversion) - May underperform Thompson Sampling empirically ### Hyperparameter Tuning | Parameter | Typical Range | Effect | Tuning Strategy | |-----------|---------------|--------|-----------------| | **α (exploration)** | 0.1 - 2.0 | Controls exploration intensity | Start 1.0, increase if under-exploring | | **λ (regularization)** | 0.1 - 10.0 | Prevents overfitting, stabilizes estimates | Increase if high variance or correlated features | **Quick tuning:** 1. Start with α = 1.0, λ = 1.0 (defaults) 2. If regret grows linearly → increase α (more exploration) 3. If high variance in estimates → increase λ (more regularization) 4. If many features (d > 100) → use λ = 5-10 --- ## Thompson Sampling: Bayesian Posterior Sampling Thompson Sampling takes a Bayesian approach: maintain probability distributions over parameters, sample from posteriors to choose actions. ### Algorithm ```python At each round t: 1. For each action a: - Sample θ̃_a ~ P(θ_a | data) 2. Choose a_t = argmax_a θ̃_a^T x_t (using sampled parameters) 3. Observe reward, update posteriors ``` ### Bernoulli Thompson Sampling (for binary rewards) ```python class BernoulliThompsonSampling: """ Thompson Sampling for Bernoulli bandits (binary rewards). Uses Beta-Bernoulli conjugacy for efficient posterior updates. Natural exploration-exploitation balance through posterior sampling. """ def __init__(self, n_actions): """ Args: n_actions: Number of available actions """ self.n_actions = n_actions # Beta prior parameters: Beta(α, β) # Start with uniform prior: Beta(1, 1) self.alpha = np.ones(n_actions) # Successes + 1 self.beta = np.ones(n_actions) # Failures + 1 def select_action(self, context=None): """ Sample from posterior and choose action with highest sample. Returns: action: Index of chosen action """ # Sample θ̃_a ~ Beta(α_a, β_a) for each action samples = np.random.beta(self.alpha, self.beta) # Choose action with highest sampled value return np.argmax(samples) def update(self, action, reward): """ Update posterior based on observed reward. Args: action: Action that was taken reward: Binary reward (0 or 1) """ # Bayesian update for Beta-Bernoulli if reward == 1: self.alpha[action] += 1 # Observed success else: self.beta[action] += 1 # Observed failure def get_posterior_stats(self): """Return posterior means and standard deviations.""" means = self.alpha / (self.alpha + self.beta) variances = (self.alpha * self.beta) / ( (self.alpha + self.beta)**2 * (self.alpha + self.beta + 1) ) return means, np.sqrt(variances) ``` ### Gaussian Thompson Sampling (for continuous rewards) ```python class GaussianThompsonSampling: """ Thompson Sampling for Gaussian bandits (continuous rewards). Assumes rewards are normally distributed. Uses Bayesian updating for Gaussian with known variance. """ def __init__(self, n_actions, prior_mean=0.0, prior_var=1.0, noise_var=1.0): """ Args: n_actions: Number of available actions prior_mean: Prior mean for each action's reward prior_var: Prior variance for each action's reward noise_var: Known noise variance in rewards """ self.n_actions = n_actions self.noise_var = noise_var # Posterior parameters (start with prior) self.means = np.ones(n_actions) * prior_mean self.variances = np.ones(n_actions) * prior_var self.counts = np.zeros(n_actions) def select_action(self, context=None): """ Sample from Gaussian posterior for each action. Returns: action: Index of action with highest sample """ # Sample θ̃_a ~ N(μ_a, σ²_a) for each action samples = np.random.normal(self.means, np.sqrt(self.variances)) return np.argmax(samples) def update(self, action, reward): """ Bayesian update for Gaussian with known variance. Args: action: Action that was taken reward: Observed continuous reward """ # Bayesian update using precision (inverse variance) precision_prior = 1 / self.variances[action] precision_data = 1 / self.noise_var # Posterior precision = prior precision + data precision posterior_precision = precision_prior + precision_data # Posterior mean is weighted average posterior_mean = ( (precision_prior * self.means[action] + precision_data * reward) / posterior_precision ) # Update self.means[action] = posterior_mean self.variances[action] = 1 / posterior_precision self.counts[action] += 1 ``` ### Linear Thompson Sampling ```python class LinearThompsonSampling: """ Thompson Sampling for linear contextual bandits. Assumes linear reward model with Gaussian noise. Samples parameters from posterior, chooses action with highest predicted reward under sampled parameters. """ def __init__(self, n_actions, n_features, lambda_=1.0, noise_var=1.0): """ Args: n_actions: Number of available actions n_features: Dimensionality of context features lambda_: Regularization parameter (prior precision) noise_var: Noise variance in rewards """ self.n_actions = n_actions self.n_features = n_features self.lambda_ = lambda_ self.noise_var = noise_var # Sufficient statistics for each action # Same as LinUCB but interpret as posterior parameters self.A = [lambda_ * np.eye(n_features) for _ in range(n_actions)] self.b = [np.zeros(n_features) for _ in range(n_actions)] def select_action(self, context): """ Sample θ from posterior, choose action with highest θ^T x. Args: context: Feature vector Returns: action: Index of chosen action """ context = np.array(context).reshape(-1, 1) sampled_rewards = np.zeros(self.n_actions) for a in range(self.n_actions): # Posterior distribution: θ_a | data ~ N(μ_a, Σ_a) A_inv = np.linalg.inv(self.A[a]) theta_mean = A_inv @ self.b[a] theta_cov = self.noise_var * A_inv # Sample θ̃_a ~ N(μ_a, Σ_a) theta_sample = np.random.multivariate_normal(theta_mean, theta_cov) # Compute predicted reward: r̃ = θ̃_a^T x sampled_rewards[a] = theta_sample.T @ context.squeeze() return np.argmax(sampled_rewards) def update(self, action, context, reward): """ Update posterior for chosen action. Args: action: Action that was taken context: Feature vector reward: Observed reward """ context = np.array(context).reshape(-1, 1) # Same update as LinUCB (Bayesian interpretation) self.A[action] += context @ context.T self.b[action] += reward * context.squeeze() def get_posterior_params(self, action): """Get posterior mean and covariance for an action.""" A_inv = np.linalg.inv(self.A[action]) mean = A_inv @ self.b[action] cov = self.noise_var * A_inv return mean, cov ``` ### When to Use Thompson Sampling **✅ Use when:** - Want best empirical performance (often beats UCB) - Don't want to tune exploration parameters (self-tuning) - Can afford computational cost of sampling - Environment may be non-stationary (posterior naturally adapts) **❌ Limitations:** - More computationally expensive (sampling from posteriors) - Less interpretable than UCB confidence bounds - Theoretical guarantees weaker (though still optimal) ### Why Thompson Sampling Works So Well **Probability matching:** The probability of choosing action a equals the probability that a is optimal. **Natural exploration schedule:** - Early: Posteriors are diffuse → high variance in samples → exploration - Late: Posteriors concentrate → low variance → exploitation No manual decay schedule needed. The Bayesian framework handles it automatically. --- ## Algorithm Comparison ### Performance Comparison ```mermaid %%{init: { 'theme':'base', 'themeVariables': { 'primaryColor':'#0b1220', 'primaryTextColor':'#e5e7eb', 'primaryBorderColor':'#10b981', 'lineColor':'#06b6d4', 'fontSize':'14px', 'fontFamily':'monospace' } }}%% graph TB subgraph Comparison["Algorithm Performance Spectrum"] direction LR EG[ε-greedy<br/>━━━━━━━━<br/>Regret: O(T^2/3)<br/>Simple, suboptimal] UCB[UCB / LinUCB<br/>━━━━━━━━<br/>Regret: O(√T log T)<br/>Provable, interpretable] TS[Thompson Sampling<br/>━━━━━━━━<br/>Regret: O(√T)<br/>Optimal, self-tuning] end EG -.worse.-> UCB UCB -.comparable.-> TS style EG fill:#1e293b,stroke:#f59e0b,color:#fde68a,stroke-width:2px style UCB fill:#1e293b,stroke:#10b981,color:#d1fae5,stroke-width:2px style TS fill:#1e293b,stroke:#06b6d4,color:#cffafe,stroke-width:2.5px style Comparison fill:none,stroke:#64748b,stroke-width:2px linkStyle 0 stroke:#ef4444,stroke-width:2px,stroke-dasharray:3 linkStyle 1 stroke:#10b981,stroke-width:2px,stroke-dasharray:3 ``` ### Detailed Comparison Table | Algorithm | Regret | Exploration | Tuning | Computational Cost | Use When | |-----------|--------|-------------|--------|-------------------|----------| | **ε-greedy** | O(T^(2/3)) | Random | Tune ε | O(1) per round | Simple baseline, non-stationary | | **UCB1** | O(√(KT log T)) | Confidence-based | None | O(K) per round | MAB, theoretical guarantees | | **LinUCB** | O(d√(T log T)) | Confidence ellipsoid | Tune α, λ | O(d²) per round | Linear rewards, interpretability | | **Thompson (Bernoulli)** | O(√T) | Posterior sampling | None | O(K) per round | Binary rewards, best empirical | | **Thompson (Linear)** | O(√T) | Posterior sampling | Tune λ | O(d³) per round | Linear rewards, self-tuning | ### Practical Recommendation **For most production use cases:** 1. **Start:** ε-greedy with ε = 0.1 (validate infrastructure) 2. **Upgrade:** LinUCB with α = 1.0, λ = 1.0 (production baseline) 3. **Optimize:** Linear Thompson Sampling (often best performance) **Thompson Sampling often wins empirically** despite similar theoretical bounds to UCB. The self-tuning exploration is powerful in practice. --- ## Hyperparameter Tuning Guide ### General Tuning Strategy ```python def tune_hyperparameters(algorithm_class, param_grid, data, metric='reward'): """ Simple hyperparameter tuning via grid search. Args: algorithm_class: Bandit algorithm class param_grid: Dict of parameter ranges to try data: Historical data (context, action, reward) tuples metric: What to optimize ('reward', 'regret', etc.) Returns: best_params: Best hyperparameter configuration results: Performance for all configurations """ from itertools import product # Generate all combinations param_names = list(param_grid.keys()) param_values = list(param_grid.values()) combinations = list(product(*param_values)) results = [] for combo in combinations: params = dict(zip(param_names, combo)) # Initialize algorithm with these params bandit = algorithm_class(**params) # Replay historical data total_reward = 0 for context, action, reward in data: # Only update if this is what bandit would have chosen chosen_action = bandit.select_action(context) if chosen_action == action: bandit.update(action, context, reward) total_reward += reward results.append({ 'params': params, 'reward': total_reward, 'coverage': sum(1 for c, a, r in data if bandit.select_action(c) == a) / len(data) }) # Find best best = max(results, key=lambda x: x[metric]) return best['params'], results # Example usage param_grid = { 'n_actions': [10], # Fixed 'n_features': [20], # Fixed 'alpha': [0.5, 1.0, 2.0], 'lambda_': [0.1, 1.0, 10.0] } # best_params, all_results = tune_hyperparameters(LinUCB, param_grid, historical_data) ``` ### Parameter Guidelines | Algorithm | Parameter | Default | When to Increase | When to Decrease | |-----------|-----------|---------|------------------|------------------| | **ε-greedy** | ε | 0.1 | Regret growing linearly | Too many suboptimal actions | | **LinUCB** | α | 1.0 | Under-exploration (linear regret) | Over-exploration (slow convergence) | | **LinUCB** | λ | 1.0 | High variance, correlated features | Underfitting, slow learning | | **Thompson** | λ | 1.0 | High variance | Underfitting | | **Thompson** | noise_var | 1.0 | Rewards more noisy | Rewards less noisy | ### Quick Diagnostic Checklist | Symptom | Likely Cause | Fix | |---------|--------------|-----| | **Regret grows linearly** | Under-exploration | Increase ε or α | | **High variance in estimates** | Need more regularization | Increase λ | | **Slow convergence** | Over-exploration | Decrease ε or α | | **All traffic on one action** | Premature convergence | Increase exploration | | **Random action selection** | Over-exploration | Decrease ε | --- ## Complete Working Example Here's a full example comparing all algorithms on the same problem: ```python import numpy as np import matplotlib.pyplot as plt # Simulate contextual bandit environment class SimulatedEnvironment: """Simulated environment for testing bandit algorithms.""" def __init__(self, n_actions=3, n_features=5): self.n_actions = n_actions self.n_features = n_features # True parameters (unknown to algorithms) self.true_theta = [ np.random.randn(n_features) for _ in range(n_actions) ] # Compute optimal expected reward for regret calculation self.optimal_expected_reward = None def get_context(self): """Generate random context.""" return np.random.randn(self.n_features) def get_reward(self, context, action): """Generate reward for context-action pair.""" true_reward = self.true_theta[action] @ context noise = np.random.normal(0, 0.1) return true_reward + noise def get_optimal_action(self, context): """Return optimal action for context (for regret calculation).""" expected_rewards = [theta @ context for theta in self.true_theta] return np.argmax(expected_rewards) def run_experiment(bandit, env, n_rounds=1000): """ Run bandit algorithm on environment. Returns: rewards: List of rewards per round regrets: List of regret per round actions: List of actions chosen per round """ rewards = [] regrets = [] actions_chosen = [] for t in range(n_rounds): # Get context context = env.get_context() # Bandit chooses action action = bandit.select_action(context) # Observe reward reward = env.get_reward(context, action) # Compute regret optimal_action = env.get_optimal_action(context) optimal_reward = env.get_reward(context, optimal_action) regret = optimal_reward - reward # Update bandit bandit.update(action, context, reward) # Log rewards.append(reward) regrets.append(regret) actions_chosen.append(action) return rewards, regrets, actions_chosen # Run comparison if __name__ == "__main__": n_rounds = 1000 n_actions = 3 n_features = 5 # Create environment env = SimulatedEnvironment(n_actions, n_features) # Test algorithms algorithms = { 'ε-greedy (ε=0.1)': EpsilonGreedy(n_actions, epsilon=0.1), 'LinUCB (α=1.0)': LinUCB(n_actions, n_features, alpha=1.0), 'Linear Thompson': LinearThompsonSampling(n_actions, n_features) } results = {} for name, bandit in algorithms.items(): rewards, regrets, actions = run_experiment(bandit, env, n_rounds) results[name] = { 'rewards': rewards, 'regrets': regrets, 'cumulative_regret': np.cumsum(regrets) } print(f"\n{name}:") print(f" Average reward: {np.mean(rewards):.3f}") print(f" Cumulative regret: {np.sum(regrets):.1f}") # Plot cumulative regret plt.figure(figsize=(10, 6)) for name, data in results.items(): plt.plot(data['cumulative_regret'], label=name, linewidth=2) plt.xlabel('Round') plt.ylabel('Cumulative Regret') plt.title('Algorithm Comparison: Cumulative Regret Over Time') plt.legend() plt.grid(True, alpha=0.3) plt.tight_layout() plt.savefig('bandit_comparison.png', dpi=150) print("\nPlot saved as 'bandit_comparison.png'") ``` --- ## Key Takeaways **Essential concepts:** **Start simple, upgrade deliberately** - Begin with ε-greedy to validate infrastructure - Upgrade to LinUCB for production baseline - Use Thompson Sampling for best empirical performance **Algorithm selection depends on your problem:** - No context → ε-greedy or UCB1 - Linear context (d < 500) → LinUCB or Linear Thompson - Need interpretability → LinUCB (examine θ weights) - Maximum performance → Thompson Sampling **Default hyperparameters work well:** - ε-greedy: ε = 0.1 with decay 1/t - LinUCB: α = 1.0, λ = 1.0 - Thompson: Use defaults, tune only if needed **Tuning based on symptoms:** - Linear regret → Increase exploration (ε or α) - High variance → Increase regularization (λ) - Slow convergence → Decrease exploration **Thompson Sampling often wins in practice** - Self-tuning exploration (no manual decay) - Best empirical results in many domains - Slight computational overhead worth it **Practical workflow:** 1. **Week 1:** Implement ε-greedy, validate logging 2. **Week 2:** Upgrade to LinUCB or Thompson 3. **Week 3:** Tune hyperparameters on historical data 4. **Week 4:** Deploy to production with monitoring --- ## Further Reading **Code repositories:** - **[Vowpal Wabbit](https://github.com/VowpalWabbit/vowpal_wabbit)**: Production-grade bandit library from Microsoft - **[Contextualbandits](https://github.com/david-cortes/contextualbandits)**: Python library with scikit-learn integration - **[PyMC Bandits](https://github.com/pymc-devs/pymc-experimental)**: Bayesian bandit implementations **Papers on these algorithms:** - **ε-greedy:** Classic exploration strategy, no single paper - **UCB1:** [Auer et al., 2002](https://link.springer.com/article/10.1023/A:1013689704352) - **LinUCB:** [Li et al., 2010](https://arxiv.org/abs/1003.0146) - **Thompson Sampling:** [Agrawal & Goyal, 2013](http://proceedings.mlr.press/v28/agrawal13.html) **Blog posts:** - [Lil'Log: Multi-Armed Bandits](https://lilianweng.github.io/posts/2018-01-23-multi-armed-bandit/) - [Jeremy Kun: Bandits Series](https://jeremykun.com/tag/multi-armed-bandit/) ---Article series

Adaptive Optimization at Scale: Contextual Bandits from Theory to Production

- Part 1 When to Use Contextual Bandits: The Decision Framework

- Part 2 Contextual Bandit Theory: Regret Bounds and Exploration

- Part 3 Implementing Contextual Bandits: Complete Algorithm Guide

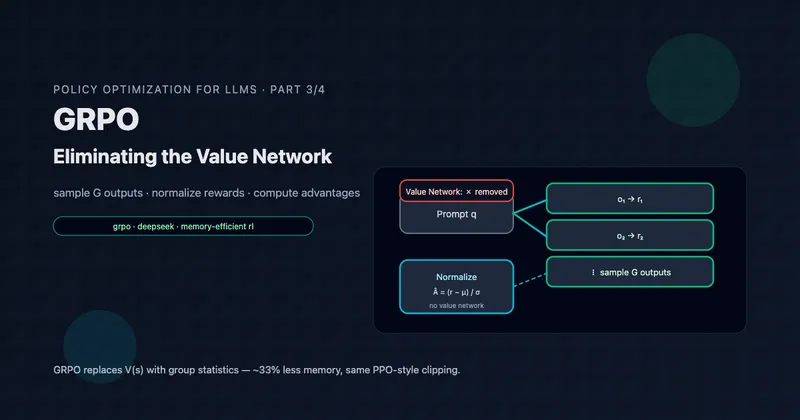

- Part 4 Neural Contextual Bandits for High-Dimensional Data

- Part 5 Deploying Contextual Bandits: Production Guide and Offline Evaluation