Deploying Contextual Bandits: Production Guide and Offline Evaluation

Systems design, offline evaluation, and monitoring strategies for running contextual bandits safely in production.

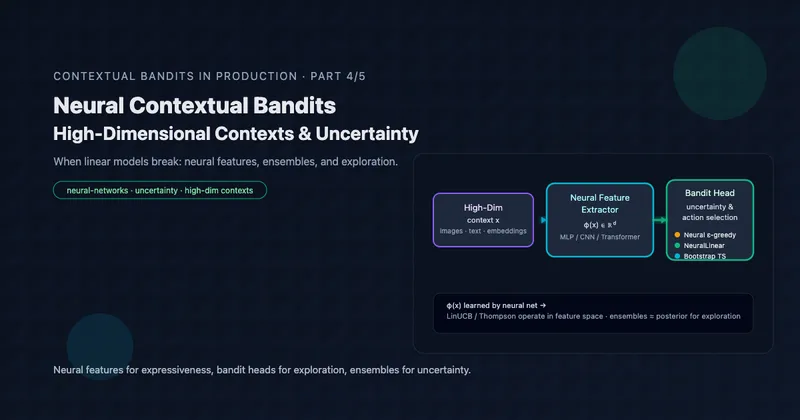

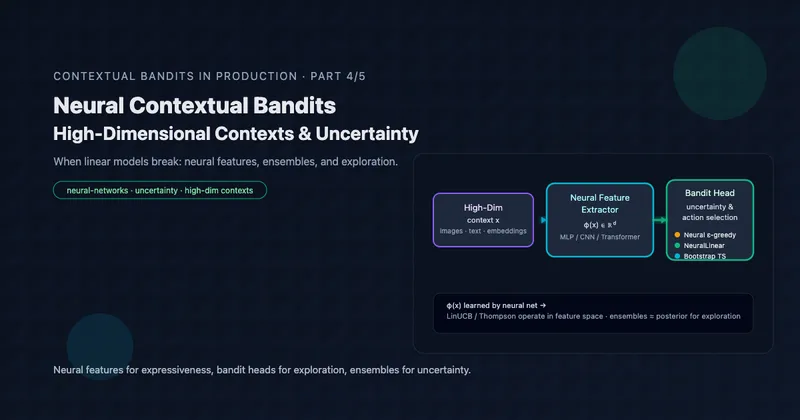

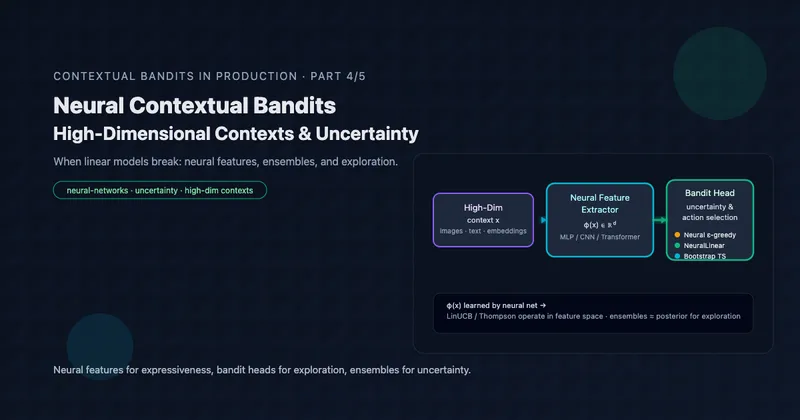

When linear models fail, neural networks step in. Learn when to use neural bandits, how to quantify uncertainty with bootstrap ensembles, and how to handle high-dimensional action spaces with embeddings and two-stage selection.

Complete Python implementations of ε-greedy, UCB, LinUCB, and Thompson Sampling. Learn which algorithm fits your problem, with default hyperparameters and practical tuning guidance.

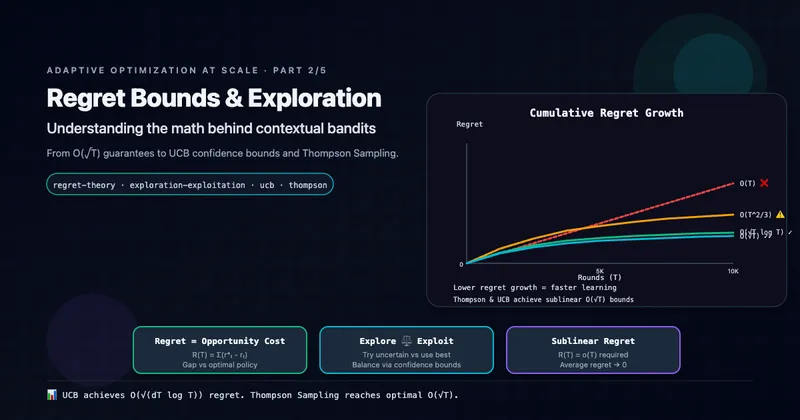

Understand the theory behind contextual bandits: regret bounds, the exploration-exploitation tradeoff, reward models, and why certain algorithms work. Math that directly informs practice.

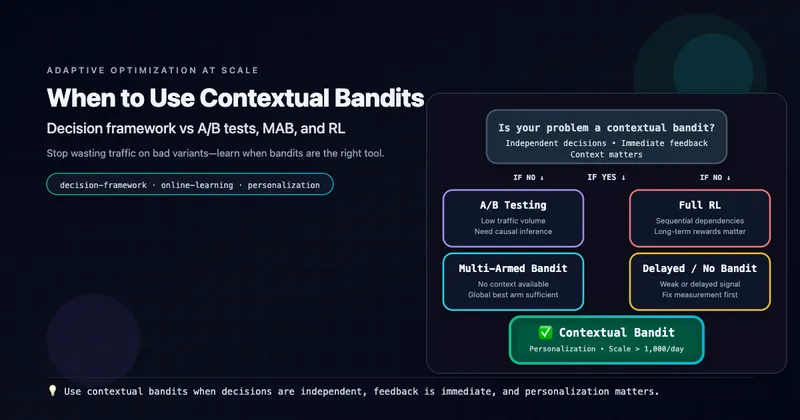

Stop running month-long A/B tests that leave value on the table. Learn when contextual bandits are the right choice for adaptive, personalized optimization—and when to stick with simpler alternatives.